6 Technical SEO Tips to Improve User Experience and Skyrocket Your Website’s Rankings

We all know that for a website to perform well, we need a strong SEO strategy.

When we think of SEO, we mostly fixate on on-page SEO, such as content optimization, and off-page SEO, such as link building.

But we generally do not bother with the most fundamental technical SEO adjustments that a website needs to go through for all the other on-page and off-page SEO to take effect.

So here in this post, I am going to discuss a few technical SEO factors of your website, which are crucial for the efficient crawling and indexing of your site by search engine crawlers.

Getting your technical SEO right lays the groundwork for better search engine rankings and more organic traffic to your website.

So, before you start devising an innovative, viral content strategy for your website, go through your site with a fine-tooth comb, draw up a technical SEO checklist of the essential to-dos, and then fix them.

Once you have gone through the pain of improving your site’s technical SEO, you rarely need to worry about it again. Then you can go ahead and work on your on-page and off-page SEO plan, follow Google’s rules, and bring in more visitors and sales.

Tweaking technical SEO may sound like a job for the web developer because being a digital marketer does not mean that you need to know how to code or create a website from scratch.

But thankfully, technical SEO is not rocket science for a digital marketer. Moreover, it is something every search engine optimizer should know how to implement as the first step towards boosting the SEO of their websites.

In this article, I am going to list all the important technical SEO tips that are necessary to strengthen the SEO of your website, how to go about doing them, and the benefits that your website will reap from implementing the technical SEO correctly.

But before jumping into all of that, let’s understand what technical SEO really is.

What is Technical SEO?

Technical SEO is the process of optimizing your website so that search engine crawlers can efficiently access, crawl, and index its contents. It does not deal with optimizing or promoting the site’s content; its main goal is to optimize the architecture of the website.

Though this is a crucial step towards achieving your SEO goals, please bear in mind that technical SEO alone cannot do anything. It should be combined with on-page and off-page SEO to get the desired results.

Six tweaks to a website’s architecture do most of the work in technical SEO. They are:

- Creating an XML sitemap

- Modifying the Robots.txt file

- Canonical tags

- Proper redirection of pages

- Customized error pages

- Preferred domain version

Most of these changes need to be done only once while setting up a website or creating a new page. They are powerful tweaks that sometimes require alteration of the source code or directives, so please be very careful while doing them. If you are not confident enough, solicit the help of a web developer or a more experienced SEO to make the changes.

That being said, let’s delve into each of the points mentioned above a little deeper.

Create an XML Sitemap

Imagine you are selling a house, and the potential buyers want a walkthrough. Would you let the buyers walk around all by themselves around the property?

The answer is “No”.

You would instead walk along with the buyer as a guide, show them around the house, point out the features and the attractive amenities so that they get the full image of the property.

This, in turn, will help the buyer make the decision whether they will purchase it or not.

Similarly, in technical SEO, your website is the house, and your buyer is the search engine crawler to which you are presenting your site for indexing.

In this case, the XML sitemap will work as a guide, which will help the search engine crawlers understand how your website is organized.

The sitemap is the one place where all the important URLs of your website should be listed for easy crawling by search engine bots. Each URL should also carry its last updated date (the lastmod field of the sitemap format). This helps search engines identify and re-index updated content quickly.

Google now is more than capable of crawling and indexing your whole website even without a sitemap. So, why do you still need one?

- Crawling and indexing are made easier with a sitemap. Why make Google work harder than necessary? Instead, help them out by creating a proper guide for your website.

- There is a sitemap section in the Google Search Console tool. This indicates how much Google still appreciates a well put together sitemap for any website. And we all know, happy Google means better ranking for your website.

A sitemap is well and good, but an optimized sitemap is what you want for your website. The main to-dos for optimizing your sitemap are:

- Only the pages and posts that are important to the website must be present in the sitemap.

- Don’t include pages that do not have unique or significant content in them like category and tag pages.

- Your sitemap should be dynamic in nature, meaning it should constantly update itself as the new contents get published on your website. If a new webpage is created, then the new URL should be added to the sitemap. Similarly, if an existing page is modified, then the last updated date should be updated accordingly in the sitemap file.

- Keep each sitemap lean, ideally somewhere between a few hundred and a few thousand entries. That said, Google accepts a single sitemap file with up to 50,000 entries, as long as its uncompressed size is 50 MB or less.
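For sites without a sitemap plugin, the to-dos above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `build_sitemap` and the example URLs are made up for this post, and the list of pages would normally come from your CMS or database so the file stays dynamic.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # per-file entry limit mentioned above


def build_sitemap(pages):
    """Return sitemap XML (bytes) for an iterable of (url, last_modified) pairs.

    build_sitemap is a hypothetical helper name, not part of any library.
    """
    pages = list(pages)
    if len(pages) > MAX_URLS_PER_SITEMAP:
        raise ValueError("too many URLs for one sitemap file; split it up")
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # lastmod tells crawlers when the page last changed
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Example with placeholder URLs and dates
example = build_sitemap([
    ("https://www.example.com/", date(2021, 4, 30)),
    ("https://www.example.com/seo-services.html", date(2021, 8, 12)),
])
print(example.decode("utf-8"))
```

Regenerating the file whenever a page is published or edited keeps the last updated dates accurate.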

Creating sitemaps for your website can be done through plugins or tools if you have a CMS website. For WordPress sites, there are quite a few choices for sitemap generators, namely Yoast SEO, Google XML Sitemaps, Simple XML Sitemaps, Companion Sitemap Generator, etc.

There are also free sitemap tools available online for generating sitemap files for non-WordPress, especially HTML or PHP websites.

To submit and check the status of the sitemap of your website, you can use Google Search Console and Bing Webmaster tools as required.

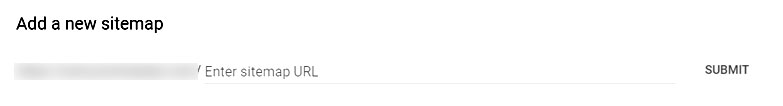

Log in to Google Search Console, open the sitemap section, and add your sitemap files there. After adding them, periodically review the “Submitted sitemaps” section to check the last-read date and the sitemap status, and fix any errors Search Console reports.

Robots.txt File

As the name suggests, the robots.txt file, located in the root directory of your website, is solely present to provide directives to the search bots that visit your site.

Crawlers first access the robots.txt file to find out what they are allowed to crawl. The main directives here are Allow and Disallow, written as Allow: / and Disallow: / when they apply to the whole site.

Depending on your requirements, you can either allow crawlers to crawl a file or directory or disallow them from doing so.

The default robots.txt file can be modified to block particular files or pages of your website, which you do not want Google or other search engines to crawl.

For example, if you want to allow the entire website for crawling except the plugins directory under wp-content, then you have to write:

User-agent: *

Allow: /

Disallow: /wp-content/plugins/

The “user-agent” here specifies the name of the search bot that will follow the directives and act accordingly. “*” means all the crawlers (wildcards). User-agent: Googlebot means directions for Google bot only.
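In the example above, both Allow: / and Disallow: /wp-content/plugins/ match a plugin URL. Google's documentation describes the more specific rule, the one with the longer path, as the winner, with Allow winning a tie. The sketch below illustrates that precedence under simplifying assumptions: `is_allowed` is a made-up helper, it handles one user-agent group only, and it ignores the * and $ wildcards.

```python
def is_allowed(path, rules):
    """Decide whether a path may be crawled under one user-agent group.

    rules is a list of ("allow" | "disallow", path_prefix) tuples.
    The longest matching prefix wins; on a tie, allow wins.
    Wildcards (* and $) are deliberately not handled in this sketch.
    """
    best_len, allowed = -1, True  # no matching rule means the path is allowed
    for kind, prefix in rules:
        if prefix and path.startswith(prefix):
            longer = len(prefix) > best_len
            tie_for_allow = len(prefix) == best_len and kind == "allow"
            if longer or tie_for_allow:
                best_len, allowed = len(prefix), kind == "allow"
    return allowed


# The rules from the example above
RULES = [("allow", "/"), ("disallow", "/wp-content/plugins/")]

print(is_allowed("/blog/technical-seo/", RULES))
print(is_allowed("/wp-content/plugins/some-plugin/readme.txt", RULES))
```

Other crawlers may resolve conflicts differently, so it is safest to write rules that do not depend on their order.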

Here is the list of all available names and values for the “user-agent” directive. (https://www.robotstxt.org/db.html).

When a website is under construction or undergoing a redesign, ideally, the crawl-parameters in the robots.txt file should be set to disallow: / for the entire site. Like:

User-agent: *

Disallow: /

So, be sure to remove that before launching your website live on the web.
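A forgotten Disallow: / is easy to catch with a small pre-launch check. The sketch below uses Python's standard `urllib.robotparser` module; the helper name `blocks_whole_site` is an assumption made for this example, and the parser's rule matching is simpler than Google's, which does not matter for a blanket block like this one.

```python
from urllib.robotparser import RobotFileParser


def blocks_whole_site(robots_txt, user_agent="*"):
    """Return True if the given robots.txt text stops user_agent from crawling "/".

    blocks_whole_site is a hypothetical helper, not a library function.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return not parser.can_fetch(user_agent, "/")


under_construction = "User-agent: *\nDisallow: /\n"
live_site = "User-agent: *\nAllow: /\nDisallow: /wp-content/plugins/\n"

print(blocks_whole_site(under_construction))
print(blocks_whole_site(live_site))
```

Running a check like this as part of the launch routine gives a clear signal before the site goes live.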

Next, the location of the XML sitemap file of your website can also be specified in the robots.txt file. The directive will look like:

User-agent: *

Allow: /

Sitemap: https://www.example.com/sitemap.xml

However, if you do not specify the location of the sitemap file, search engines will still be able to find it. So technically, this is an optional directive.
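To confirm that the Sitemap line is being picked up, you can read it back with Python's standard `urllib.robotparser` module. This is a minimal sketch that assumes Python 3.8 or later (where `site_maps()` is available); `declared_sitemaps` is a made-up helper name.

```python
from urllib.robotparser import RobotFileParser


def declared_sitemaps(robots_txt):
    """Return the sitemap URLs declared in the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present
    return parser.site_maps() or []


robots_txt = (
    "User-agent: *\n"
    "Allow: /\n"
    "Sitemap: https://www.example.com/sitemap.xml\n"
)
print(declared_sitemaps(robots_txt))
```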

Be very cautious while modifying a robots.txt file. If you get it wrong, it can adversely affect your website’s performance. The three main error scenarios and their consequences are:

- The robots.txt file should always be present in the root directory of your website’s path. If not present, search engines may assume that everything on your website is publicly available, and they are allowed to crawl them all. In this case, all the webpages that you do not want to get crawled will become fair game for crawlers.

- Your robots.txt file should be properly formatted so that search engines do not have any problem understanding the directives. If your robots.txt file is not legible to search engines, then they will ignore it and may crawl everything just like with no robots.txt file.

- If you block (disallow) a resource in the robots.txt file by mistake, search engines will not crawl it. However, if they find a link to the blocked resource on another page, they may still index its URL without crawling it.

A robots.txt file can come in handy for your website in several ways:

- To make crawling faster for your website. If your site is very resource heavy, it can take ages to crawl it in its entirety. In such a case, you can use the robots.txt file to limit what can be crawled. You can exclude the files or sections of your website that are not important for SEO, thus reducing crawl time without any impact on your site’s rankings.

- To keep crawlers away from pages that do not contain any original content, or from a page that is under construction and is not yet ready for crawling.

- To keep crawlers away from duplicate landing pages created for paid marketing with the same intent but with different offers and discounts. Letting search engine crawlers pick up all of them can weaken the organic ranking of the main page substantially. So, block all of the other pages except the original one.

One important thing to keep in mind is that blocking something in the robots.txt file does not guarantee that it won’t show up in search engine result pages. If internal or external pages link to the blocked page, search engines may still index its URL, even though they cannot crawl its content.

So, if you really want to keep a page out of the rankings, you can either password protect the page, or add the directive <meta name="robots" content="noindex" /> somewhere between the <head> and </head> of the page. For the noindex directive to work, the page must not be blocked in robots.txt, because crawlers have to fetch the page to see the tag.
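To verify that a page really carries the noindex directive, you can scan its HTML for the robots meta tag. The sketch below uses Python's standard `html.parser` module; the class and function names are assumptions made for this example, and fetching the page is left out so the check works on any HTML string.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives found in <meta name="robots"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attrs = dict(attrs)
        if (attrs.get("name") or "").lower() == "robots":
            content = attrs.get("content") or ""
            # content can hold several comma-separated directives
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def has_noindex(html):
    """Return True if the HTML contains a robots meta tag with noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex" /></head></html>'
print(has_noindex(page))
```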

For a non-WordPress HTML or PHP website, for making changes in the robots.txt file, you can download the file using an FTP client, open it using a text editor, make the required modifications and upload the updated file when done.

For a WordPress website with Yoast plugin, you can access the robots.txt file directly by visiting the Yoast SEO tools > File editor > robots.txt.

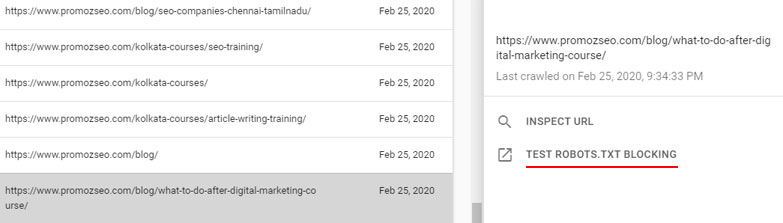

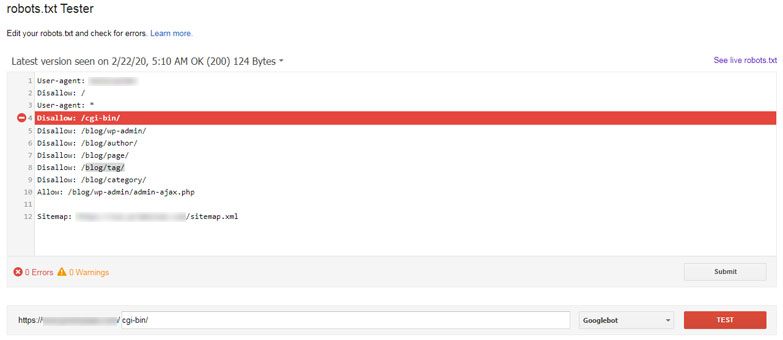

Finally, the best way to test and validate the robots.txt file is through the “robots.txt Tester” option in the Google Search Console tool. It comes under the “Coverage” section; just click the “Test robots.txt blocking” link in the right-hand side panel.

If a page or resource is not blocked, the test button label will change to “Allowed” and turn green. If it is blocked, the directive line causing the block will be highlighted, and the test button label will change to “Blocked”.

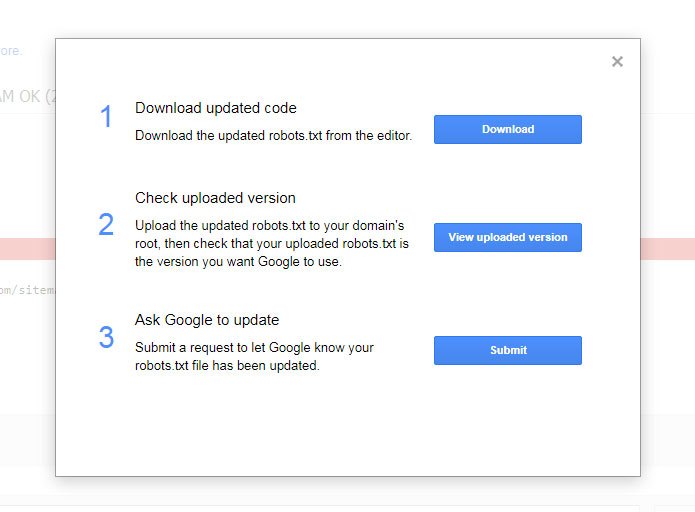

Once you are done modifying your robots.txt file, you have to test it as described above and then inform Google about the changes. This can be done by clicking the “Submit” button in the robots.txt tester screen and choosing the third option (Ask Google to update: Submit a request to let Google know your robots.txt file has been updated) in the popup window.

Canonical Tags

On a website, it is common for the same content to be reachable through multiple URLs. This happens especially if you are using a CMS or URL rewriting techniques. In itself, this does not hurt the website’s performance much.

But search engine crawlers treat these multiple URLs for the same content as individual pages and, thus, flag them as duplicate content.

Crawling and indexing duplicate content is a significant resource drain. Search engines then have to choose one URL to show and may split ranking signals among the duplicates, which can drag down your website’s ranking. And if the duplication looks like a deliberate attempt to manipulate results, your site can be penalized.

The solution to the unwanted “duplicate content” problem is the canonical tag. Added inside the <head> of a page, it tells search engines the preferred URL to index for the original piece of content.

Search engine crawlers generally index the URL given in the canonical tag and ignore the other URLs that serve the same content.

As a rule, every page should have a canonical tag. It can be self-referencing, meaning it specifies the page’s own URL for indexing, or it can point to a different URL that holds the original content.

A canonical tag looks like this:

<link rel="canonical" href="https://example.com/yoast-seo/" />

Here, the link referenced in the tag (“https://example.com/yoast-seo/”) is the URL that needs to be indexed for the original content.
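If you want to check which canonical URL a page actually declares, a short script can pull it out of the HTML. This is a rough sketch using Python's standard `html.parser` module; `CanonicalParser` and `find_canonicals` are names invented for this example.

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attrs = dict(attrs)
        rel_values = (attrs.get("rel") or "").lower().split()
        if "canonical" in rel_values and attrs.get("href"):
            self.canonicals.append(attrs["href"])


def find_canonicals(html):
    """Return all canonical URLs declared in the HTML (ideally exactly one)."""
    parser = CanonicalParser()
    parser.feed(html)
    return parser.canonicals


page = '<head><link rel="canonical" href="https://example.com/yoast-seo/" /></head>'
print(find_canonicals(page))
```

A page that returns an empty list has no canonical tag, and a page that returns more than one URL needs to be cleaned up.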

Some crucial reasons why the canonical tag is required are:

- If your content is duplicated by someone else, then the canonical tag will tell the search engines which one is the original content (provided they have pointed to the original content URL in their canonical tag).

- If you are for any reason republishing your content on other platforms, canonical tags can be used to let search engines know that this is a copy of the original content and inform them what the original URL is.

- Websites that can be accessed both with and without www, and over both http and https, are treated as different sites by search bots. To let Google know the preferred domain version of your website, you can use the canonical tag. Use the same URL format for all your canonical tags. If you are using https and www, then stick with that throughout your website. Consistent canonical tags help Google recognize your preferred domain.

Now that you know why you should use canonical tags, let’s list some important dos and don’ts regarding them.

- Each and every page of your website should have a canonical tag.

- Add only one canonical tag for each page.

- Specify the canonical tag in the head section of your webpage, between <head> and </head>.

- The homepage of a website should always have a canonical tag specifying the exact preferred domain version. (For example, https://www.example.com/ or https://example.com/ or http://www.example.com/ or http://example.com/)

- All internal page canonical tags of a website should specify the same domain format as on the homepage.

- In case you are not using a self-referencing canonical URL, always make sure that the URL you are mentioning in the tag is a valid one.

- The URL in the canonical tag should never carry the “noindex” robots tag. Otherwise, you are pointing search engines to a URL that you have blocked from indexing.

- Do not point a canonical tag to a page URL that does not have a self-referencing canonical URL. This will create a canonical chain which will confuse crawlers and lead to potential troubles.
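Several of the rules above can be checked automatically once you have collected each page's canonical URL. The sketch below is one possible audit, under stated assumptions: `audit_canonicals` is a hypothetical helper, its input is a plain dictionary you build yourself (for example from a crawl), and it only flags missing tags, inconsistent domain formats, and canonical chains.

```python
from urllib.parse import urlsplit


def audit_canonicals(canonical_of, preferred_origin):
    """Return a list of (page, problem) tuples.

    canonical_of maps each page URL to the canonical URL it declares (or None).
    preferred_origin is the preferred domain version, e.g. "https://www.example.com".
    """
    preferred = urlsplit(preferred_origin)
    problems = []
    for page, target in canonical_of.items():
        if not target:
            problems.append((page, "missing canonical tag"))
            continue
        parts = urlsplit(target)
        if (parts.scheme, parts.netloc) != (preferred.scheme, preferred.netloc):
            problems.append((page, "canonical does not use the preferred domain format"))
        # The target should declare itself as canonical; otherwise we have a chain.
        if target in canonical_of and canonical_of[target] != target:
            problems.append((page, "canonical chain"))
    return problems


# Placeholder data for illustration
pages = {
    "https://www.example.com/": "https://www.example.com/",
    "https://www.example.com/a/": "https://www.example.com/b/",
    "https://www.example.com/b/": "https://www.example.com/c/",
    "https://www.example.com/c/": "https://www.example.com/c/",
    "https://www.example.com/d/": "http://example.com/d/",
    "https://www.example.com/e/": None,
}
for page, problem in audit_canonicals(pages, "https://www.example.com"):
    print(page, "->", problem)
```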

Proper Redirection

When you delete a page or change its URL, the old URL no longer exists. A visitor trying to open the old URL lands in a no man’s land, with a “404 page not found” message displayed on the screen. This not only upsets the user experience but also creates difficulties for search engines.

If such a page continues to be nonexistent for a long time, search engines like Google may consider de-indexing it, which may cause severe loss to the respective website.

To remedy this double-sided problem, we use URL or page redirection.

Page redirects are used to inform search engines that a webpage has been moved to a new location. For SEO purposes, mainly two types of redirects are used, namely 301 and 302.

The 301 redirection is used when a page has been permanently moved to a new URL. This redirect helps search engines de-index the old URL and index the new URL while transferring the existing PageRank without any significant dilution from the old URL to the new one.

Redirect 301 /services.html https://www.example.com/seo-services.html

Here, “services.html” specifies the old page (nonexistent URL), and the “https://www.example.com/seo-services.html” is the absolute URL of the new page.

Unlike 301 redirects, 302 redirects are used when a page has been temporarily shifted to a new URL. This mostly happens when a webpage goes under maintenance, and the content is temporarily moved to a new URL for continued user access. Once the maintenance period is over, the content will be restored to its original URL. The 302 redirects do not permanently transfer the PageRank juice from the old to the new URL.

Redirect 302 /seo-services.html https://www.example.com/under-maintenance-seo-services.html

In scenarios where we find that a particular landing page is performing better than the others (more traffic and conversions), we can use 302 redirects to shift the under-performing URL to the winning page temporarily. Be careful, though. Don’t use this technique to deceive the user; else, Google can penalize the website big time.

If you are using a WordPress website, you can use a plugin to redirect a URL. If not, you can edit the .htaccess file through the cPanel of your website (cPanel -> File Manager -> root -> .htaccess file) to redirect URLs.

A few things to keep in mind while creating these redirects are:

- In the case of 301 redirects, it is important that the page redirects straight to the final destination. If you want to redirect page A to page C, do not redirect page A to page B and then page B to page C. This creates a redirect chain that confuses crawlers and slows down page loading. The best practice is to redirect page A to page C directly.

- Try to redirect nonexistent pages to existing, relevant, and internal pages and avoid redirecting all of them to the site’s home page.

- Mistakes in the redirects you add to the .htaccess file can cost your website. So, if you are not sure, take a backup of the file before changing it.

- Periodically audit your website to identify the new 404 pages, redirect chains, etc. using tools like Google Search Console and ScreamingFrog.
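Redirect chains are easy to miss when redirects pile up over time. The sketch below shows one way to collapse them so that every old URL points straight to its final destination, and then prints the matching Redirect 301 lines. Both helper names and the example paths are assumptions made for illustration.

```python
def flatten_redirects(redirects):
    """Map every old path directly to its final destination.

    redirects maps old path -> new path. Raises ValueError on a redirect loop.
    """
    flattened = {}
    for source in redirects:
        seen = {source}
        target = redirects[source]
        while target in redirects:  # the target is itself redirected: follow it
            if target in seen:
                raise ValueError(f"redirect loop involving {target}")
            seen.add(target)
            target = redirects[target]
        flattened[source] = target
    return flattened


def htaccess_lines(redirects, origin):
    """Format flattened redirects as Redirect 301 lines with absolute targets."""
    return [
        f"Redirect 301 {old} {origin}{new}"
        for old, new in flatten_redirects(redirects).items()
    ]


# Page A -> page B -> page C becomes A -> C and B -> C
chain = {"/page-a.html": "/page-b.html", "/page-b.html": "/page-c.html"}
for line in htaccess_lines(chain, "https://www.example.com"):
    print(line)
```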

Custom Error Pages

Now that you know when and how to redirect the 404 nonexistent pages, you may think that the problem is solved once and for all. However, that is not the case.

What if you remain unaware of a few 404 pages that continue to lead a big chunk of your visitors to blank pages that don’t offer any internal links to browse the website? What will happen?

Those visitors will simply leave your website, increasing its bounce rate. On top of that, you will keep losing revenue, and Google may demote your site’s ranking because of the high bounce rate.

So, to cure this problem, you need to first understand the reasons behind these nonexistent 404 pages.

You already know the two main reasons from the previous point.

- First, maybe you deleted one page of your website, which made the URL nonexistent.

- Second, maybe you modified one existing URL that changed it permanently, making the older one nonexistent.

But there is a third one.

There could be a backlink to your website, most likely not built by you, that points to a wrong URL on your domain. Anyone who clicks that link ends up on a 404 page. Worse, this will happen more often as your website becomes popular, and you cannot stop it from occurring.

And you will get to know about these 404 pages through Google Search Console or ScreamingFrog tools, but only when you check them.

The 404 error pages increase bounce rate, negatively impact a website’s ranking, and decrease conversions and sales. So, you need to solve this problem quickly and for good, so that visitors do not bump into a browser-default 404 page even when you have not redirected a nonexistent page.

And the solution to this problem is the custom error pages.

WordPress websites have optimized error pages by default, but if you do not have one, you can edit the error pages yourself to best suit your purpose.

For a non-WordPress site, add the following line to the .htaccess file.

ErrorDocument 404 /errorpage404

Here, “ErrorDocument” is the directive, “404” is the type of the error, and “errorpage404” is the custom error page (file) name.

The important points to consider while creating and optimizing a custom error page are:

- It should have the same structure as well as the navigation menu as the website. This helps users to navigate the site better when they encounter a 404 page.

- The custom error page should use user-friendly language to let visitors know that what they are looking for is no longer available.

- The custom error page should suggest alternative URLs to help users find what they were looking for and to keep them from leaving your website.

- Each custom error page should have easy navigation back to the homepage and to other relevant pages, for the ease of users to keep browsing your website.

- Additionally, adding search functionality could be very helpful for the site as well as its users.
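The points above can be combined into a small page generator. This is only a sketch under assumptions: `build_404_page` is a made-up helper, the /search form target is hypothetical, and the suggestions come from Python's standard `difflib` module, which simply looks for known paths that resemble the requested one. A real error page would also reuse your site's own header and navigation menu.

```python
import difflib
from html import escape


def build_404_page(requested_path, known_paths, max_suggestions=3):
    """Return the HTML body of a custom 404 page with suggestions and search."""
    suggestions = difflib.get_close_matches(
        requested_path, known_paths, n=max_suggestions, cutoff=0.6
    )
    items = "".join(
        f'<li><a href="{escape(path, quote=True)}">{escape(path)}</a></li>'
        for path in suggestions
    )
    return (
        "<h1>Sorry, this page is no longer available</h1>"
        f"<p>We could not find {escape(requested_path)}.</p>"
        '<nav><a href="/">Back to the homepage</a></nav>'
        # /search is a hypothetical endpoint; point it at your own site search
        '<form action="/search" method="get">'
        '<input type="search" name="q"><button>Search</button></form>'
        f"<ul>{items}</ul>"
    )


html_body = build_404_page(
    "/seo-servces.html", ["/seo-services.html", "/contact.html"]
)
print(html_body)
```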

Following the above directions while creating custom error pages will help you retain the traffic and won’t impact your SEO much, even if you have a few 404 error pages not redirected yet.

Preferred Domain Version

By default, a website can be reached at more than one URL variation, such as example.com or www.example.com. Both these URLs will take users to the same content, so it is not a big deal with regards to user experience. But when it comes to search engines, this is a big no-no.

Search engine crawlers will consider the two URLs above as separate web properties, where both lead to the same content, which will land your website in a hot pile of mess SEO-wise.

To prevent this, you need to specify the preferred domain version of your website from the very start. Search engines will then consider and index your set domain and disregard the rest.

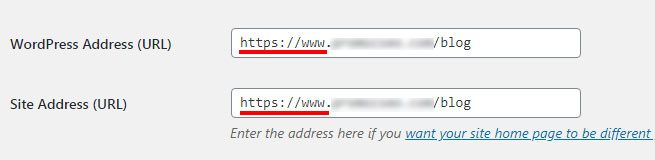

If you have a WordPress website, you can set your preferred domain through the settings.

In the case of other websites, you can do this as follows:

Non-www to www

RewriteCond %{HTTP_HOST} ^(?!www\.)(.+) [NC]

RewriteRule ^(.*) http://www.%1/$1 [R=301,NE,L]

www to non-www

RewriteCond %{HTTP_HOST} ^www\.example\.com [NC]

RewriteRule ^(.*)$ http://example.com/$1 [L,R=301]

Add these lines to your website’s .htaccess file, after a RewriteEngine On line if one is not already present, and you are done. Just make sure to replace the domain and TLD with your own.
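Before relying on the rules, it helps to be clear about which URLs should redirect and where. The sketch below mirrors the intent of the rewrite rules in plain Python so you can test a list of URLs against your preferred host. The function name is an assumption for this example, the scheme is left unchanged, and ports in the host are not handled.

```python
from urllib.parse import urlsplit, urlunsplit


def _strip_www(host):
    host = host.lower()
    return host[4:] if host.startswith("www.") else host


def preferred_url(url, preferred_host):
    """Return the URL a request should 301 to, or None if no redirect is needed."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host == preferred_host.lower():
        return None  # already on the preferred domain version
    if _strip_www(host) != _strip_www(preferred_host):
        return None  # a different site altogether; nothing to redirect
    # Same site, other variation: keep scheme, path, and query, swap the host
    return urlunsplit(parts._replace(netloc=preferred_host))


print(preferred_url("http://example.com/seo-services.html?x=1", "www.example.com"))
print(preferred_url("http://www.example.com/", "www.example.com"))
```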

Wrapping Up

Making the crawling and indexing of your website easier for search engines is the main objective of technical SEO. Google looks kindly on any website that helps its crawlers rather than hindering them. So, making these changes to your website at the very beginning will give your site a solid foundation to stand on SEO-wise.

The six technical SEO tips discussed above will help you achieve that. So, go ahead and start making the changes. Once search engines have recrawled your site, you should start to notice improvements in your website’s performance.

I hope this article helped you understand technical SEO better. If you have any queries regarding any of the technical SEO factors, please feel free to leave them in the comments down below.

Please let us know your experience of fixing the technical SEO issues of your website. Did it help? Did you face any specific problem while doing them? Tell us in the comments.

