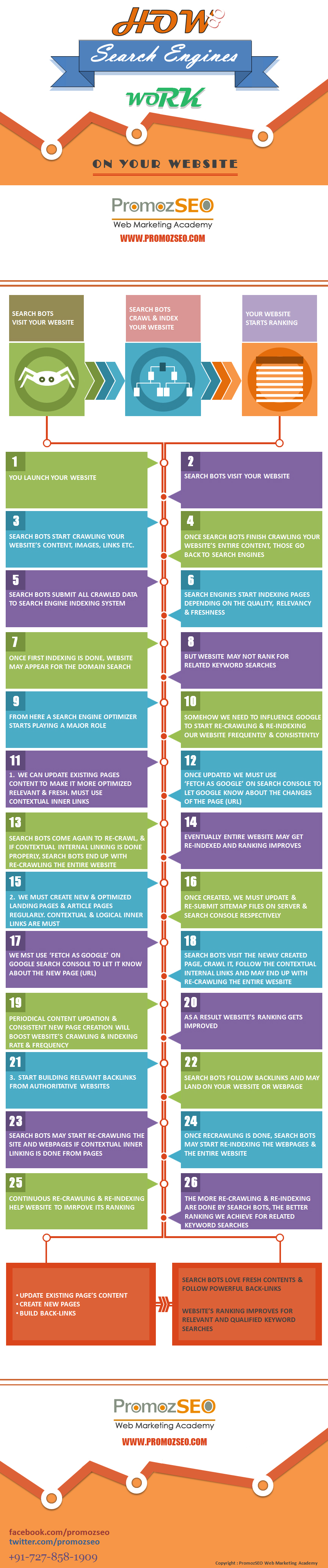

How Search Engines Work on a Website - 3 Must-Do SEO Techniques to Improve a Site's Crawling and Indexing Rate

Search Engine Optimization, or SEO, is one of the most discussed topics in digital marketing, website promotion, lead generation and blogging. It is one of the most reliable ways to bring traffic to a website, and most of that traffic is targeted and converts easily. But to do SEO properly, we must first understand how Search Engines work on our website and how we can build our sites to get the best organic results.

Google, the biggest Search Engine, is often said to use around 200 different ranking signals to rank a website. But before studying those ranking factors, one should understand the basics of the Search Engine Optimization process.

Search Engines work much like a human being. If we read a long piece of content only once, we can answer very few questions about it, and even those answers may not be good. But if we read it many times and keep refreshing our memory of it, we can answer far more questions, and the quality of our answers improves remarkably. In other words, the more we read, the more we memorize and the better we answer. In the same way, Search Engines must read (crawl) our website's content again and again, and memorize (index) it frequently, before they rank the site high on the Search Engine Results Page for relevant keyword searches.

Here we are going to discuss how Search Engines work on our website and what they actually look for on it. If you are a beginner in SEO, this should help you understand the entire process clearly. So let's start.


What Happens When We First Launch Our Website on the Internet

- First we launch our website on the internet, after doing all the initial on-page optimization and some technical optimization.

- Search bots are always looking for new websites on the internet. So, once the site is live, search bots find and visit it.

- Search bots crawl the content of the website (text, images, links, and other media files).

- Search bots report back to the Search Engine, which starts storing the crawled data in its indexing system.

- The Search Engine may start indexing the pages of the website, depending on the quality, priority, freshness and relevance of their content.

- Once the Search Engine has completed this first stage of indexing, the website may start appearing in results when someone searches for its domain name.

At this point a fresh website may not rank for any related keyword searches, because it has been crawled and indexed only once.
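The crawl and index steps listed above can be pictured with a toy example. This is only a minimal sketch, assuming an index is a simple map from words to the pages that contain them; real Search Engines use far more elaborate systems. The page URLs and text, and the names `CRAWLED_PAGES`, `build_index` and `lookup`, are hypothetical and used purely for illustration.

```python
import re
from collections import defaultdict

# Hypothetical crawled pages: URL -> text that a search bot extracted.
CRAWLED_PAGES = {
    "https://example.com/": "Welcome to our SEO blog",
    "https://example.com/crawling": "How search bots crawl a website",
    "https://example.com/indexing": "How search engines index crawled content",
}


def build_index(pages):
    """Map every lowercase word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"[a-z0-9]+", text.lower()):
            index[word].add(url)
    return index


def lookup(index, query):
    """Return the URLs that contain every word of the query, sorted."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    if not words:
        return []
    matches = set.intersection(*(index.get(word, set()) for word in words))
    return sorted(matches)


index = build_index(CRAWLED_PAGES)
print(lookup(index, "how search"))
```

A page can only be returned for a query once its words have been stored in the index, which is why a page that has never been crawled cannot appear for any keyword search.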

After that first crawl, a search engine optimizer needs to start the next stage of optimization to improve the ranking of the website or its pages for relevant keyword searches. We have to make sure that our website is re-crawled and re-indexed frequently. There are three ways to encourage frequent re-crawling and re-indexing by Search Engines.

Update Existing Pages' Content

- We should periodically update and revise the content of our existing live pages to keep it relevant and fresh.

- We must let Search Engines know about those content changes on the page (URL), using each Search Engine's webmaster tools. For Google, that means Google Search Console: submit the updated page's URL and request quicker re-indexing. This was originally done through 'Fetch as Google', which Google has since replaced with the URL Inspection tool and its 'Request Indexing' option.

- Once Search Engines know about those content changes, search bots visit and re-crawl the already crawled page.

- If we link to one page from within another page's content (a contextual internal link), search bots may follow those internal links and end up re-crawling the entire website.

- As a result, the whole website may get re-indexed by Search Engines.

- If we repeat this process continuously, our website is more likely to start ranking for keyword searches.
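How a bot can end up re-crawling a whole site from one updated page can be sketched as a breadth-first walk over internal links. This is a simplified illustration, not how any particular Search Engine is implemented: the `SITE` dictionary stands in for pages that a real bot would fetch over HTTP, and the URLs and the names `LinkCollector` and `crawl_from` are hypothetical.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

# Hypothetical site: URL -> HTML. A real bot would fetch these over HTTP.
SITE = {
    "https://example.com/": '<a href="/about">About</a>',
    "https://example.com/about": '<p>See our <a href="/blog/seo-basics">SEO guide</a>.</p>',
    "https://example.com/blog/seo-basics": (
        '<a href="/">Home</a> <a href="https://other.example.org/">Partner</a>'
    ),
    "https://example.com/orphan": "<p>No page links here.</p>",
}


class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def crawl_from(start_url, site):
    """Visit pages breadth-first, following only links on the same host."""
    host = urlparse(start_url).netloc
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue:
        url = queue.popleft()
        if url not in site:
            continue  # page does not exist in this toy site
        visited.append(url)
        parser = LinkCollector()
        parser.feed(site[url])
        for href in parser.hrefs:
            target = urljoin(url, href)  # resolve relative links
            if urlparse(target).netloc == host and target not in seen:
                seen.add(target)
                queue.append(target)
    return visited


# Start from the page we just updated and see how far internal links lead.
print(crawl_from("https://example.com/about", SITE))
```

In this toy site the walk that starts at the updated page reaches every page that is linked internally, while the page that no other page links to is never visited. That is the practical reason to add contextual internal links.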

Create New Pages

- Apart from updating the content of existing pages, we must consistently create new pages with fresh, optimized content on our website.

- Once we create a new page (URL) on our site, we must update the sitemap.xml file on the server. For WordPress and similar CMSs, the tools do this automatically.

- Once the sitemap.xml file is updated on the server, we should re-submit it in the Search Engines' webmaster tools.

- After this, we should request indexing of the new URL in Google Search Console ('Fetch as Google' in older versions, now the URL Inspection tool), or use a similar technique for other Search Engines.

- Once Search Engines know about the new page URL, search bots visit and crawl the fresh content as early as possible.

- If we have used contextual internal links between the newly created page and the other pages of the site, search bots may follow those links and end up re-crawling the entire site.

- The Search Engines may then re-index the website, and over time the website's ranking may improve.
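For sites where the sitemap is not generated automatically, the sitemap.xml update mentioned above can be scripted. The following is a minimal sketch using Python's standard library and the sitemaps.org `urlset` format; the page URLs, the dates and the function name `build_sitemap` are hypothetical, and writing the file to the server and re-submitting it are left out.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs.

    last_modified is a date string in YYYY-MM-DD form.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages; the last entry is the newly created page.
pages = [
    ("https://example.com/", "2024-01-10"),
    ("https://example.com/about", "2024-01-10"),
    ("https://example.com/blog/new-post", "2024-02-01"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Regenerating the whole file from the current list of pages, rather than editing it by hand, keeps the sitemap consistent each time a page is added or updated.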

Updating existing content and creating new, optimized content both come under on-page SEO.

Build Relevant Back-Links from Authoritative Websites

Back-link building (off-page SEO) is another crucial SEO technique. Back-links work much like references in real life: Search Engines use them to measure the popularity, relevance and authority of a website. The more back-links you build from unique websites to your site (or its pages), the better ranking you can expect. Remember, though, that while the number of back-links matters, their relevance matters more, and the authority of the linking domain matters most.

- Say you have built a back-link to your website on example.com.

- When a Search Engine crawls the example.com site, it finds the back-link you built there to your website.

- The Search Engine may follow that back-link and land on your website (on the linked page).

- The Search Engine re-crawls the linked page.

- If it finds contextual internal links, it may follow them and re-crawl the whole website.

- As a result, another re-indexing becomes possible and the site's ranking may improve.
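Since back-links from unique websites are what count, it can help to look at how many distinct sites link to you rather than at the raw number of links. The sketch below is a rough illustration only: it treats the hostname (minus a leading "www.") as the "website", which is a simplifying assumption, and the back-link URLs and the function name `referring_domains` are hypothetical.

```python
from urllib.parse import urlparse


def referring_domains(backlink_urls):
    """Return the sorted, de-duplicated hostnames of the linking pages."""
    domains = set()
    for url in backlink_urls:
        host = urlparse(url).hostname
        if not host:
            continue  # skip anything that is not a full URL
        if host.startswith("www."):
            host = host[4:]  # treat www.example.com and example.com as one site
        domains.add(host)
    return sorted(domains)


# Hypothetical pages that link to our site.
backlinks = [
    "https://www.example.com/resources",
    "https://example.com/blog/post-1",
    "http://news.example.org/story",
    "https://blog.example.net/roundup",
]
domains = referring_domains(backlinks)
print(len(backlinks), "back-links from", len(domains), "unique websites:", domains)
```

Here four back-links come from only three unique websites, because two of them sit on the same site.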

Conclusion

Search Engine Optimization is a continuous process. If we keep Search Engines busy on our website with fresh content and the back-links we build, our website's ranking is likely to improve over time. So update the content of your existing pages, create new pages with fresh and unique content, and keep building relevant back-links from authoritative websites.
